A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

This glossary is a work in progress. Several named entries haven't been created yet, and other planned entries await addition to the list.

For your questions or thoughts, comments are open, way down at the bottom of the page, or as always, you can send me an email (see my profile on the right for email address).

Absolutism

Aristotelian Logic

Base Rate Fallacy

Bayes' Theorem

Bernoulli Urn Rule

Binomial Distribution

Boolean Algebra

Calibration

Cognitive Bias

Combination

Confidence Interval

Conjunction

Consequentialism

Cumulative Distribution Function

Deduction

Derivative

Disjunction

Effect Size

Evidence

Expectation

Extended sum rule

False Negative Rate

False Positive Rate

Falsifiability Principle

Frequency Interpretation

Gaussian

Hypothesis Test

Indifference

Induction

Integral

Joint Probability

Knowledge

Legitimate Objections to Science (no link: empty category)

Likelihood Function

Logical Dependence

Marginal Distribution

Maximum Entropy Principle

Maximum Likelihood

Mean

Median

Meta-analysis

Mind Projection Fallacy

Model Comparison

Morality

Noise

Normal Distribution

Normalization

Not

Null Hypothesis

Null Hypothesis Significance Test

Ockham's Razor

Odds

p-value

Parameter Estimation

Permutation

Philosophy

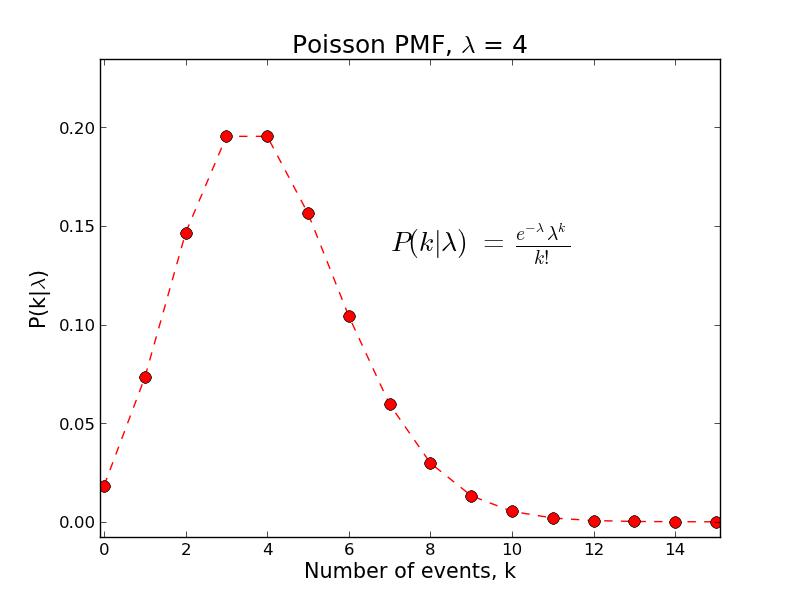

Poisson Distribution

Posterior Probability

Prior Probability

Probability

Probability Distribution Function

Product Rule

Quantile Function

Rationality

Regression to the mean

Sampling Distribution

Science

Sensitivity

Specificity

Standard Deviation

Statistical Significance

Student Distribution

Sum Rule

Survival Function

Theory Ladenness

Utilitarianism

XOR

Absolutism

Absolutism is a historical and still prevalent form of moral philosophy. It asserts that certain behaviours are improper, independent of their consequences. According to this philosophy, if a behaviour is improper, then this constitutes an absolute moral principle, and the behaviour is improper under all possible circumstances.

This approach to morality is demonstrably incorrect, as explained in two blog articles: Scientific Morality and Crime and Punishment.

A standard contrasting view of morality is consequentialism.

Related Entries:

MoralityConsequentialism

Related Blog Posts:

Scientific Morality

Crime and Punishment

Back to top

Base-Rate Fallacy

The base-rate fallacy is a common failing of informal human thought, and some formal methodologies, consisting of failure to make use of prior information when performing inferences based on new data.

As Bayes' theorem shows, the posterior probability for a proposition is proportional to the product of the prior probability and the likelihood function, and so failure to account for the prior probability can lead to results deviating considerably from Bayes' theorem. Any such deviation constitutes an irrational inference.

Essentially, the base rate fallacy consists of mistaking the likelihood function for the posterior, that is, thinking that P(D | HI) is necessarily the same thing as P(H | DI), which it most certainly isn't.

A famous example, whereby doctors were found to commonly overestimate by a factor of 10 the probability that patients suffered from a certain disease, based on their observed symptoms, is analyzed in the blog post, The Base Rate Fallacy.

Null-hypothesis significance testing performs inference based on something approximately equal to P(D | HI) (ignoring temporarily the peculiar practice of tail integration), and so can also be seen to be guilty of the base-rate fallacy.

Maximum likelihood parameter estimation, another popular technique among scientists, also looks exclusively at the likelihood function, and therefore also deviates from rational inference in cases where the prior information is not uniform over the parameter space.

Related Entries:

Related Blog Posts:

The Base Rate Fallacy looks at simple probability estimation that often goes wrong

Fly Papers and Photon Detectors examines the breakdown of maximum likelihood

The Insignificance of Significance Tests studies how null-hypothesis significance testing fails to meet the needs of science

Back to top

Bayes' Theorem

Bayes' theorem is a simple algorithm for updating the probability assigned to a proposition by incorporating new information. The initial probability is termed the prior, and the updated probability is termed the posterior. Bayes' theorem constitutes the model for optimal acquisition of knowledge, and any ranking of the reliability of propositions that differs markedly from the results of Bayes' theorem is termed irrational.

The theorem is named after Thomas Bayes, despite its having been known to earlier authors. Bayes' posthumously published work was ground breaking, however, in its approach to probabilistic inference.

The theorem is named after Thomas Bayes, despite its having been known to earlier authors. Bayes' posthumously published work was ground breaking, however, in its approach to probabilistic inference.

The derivation of Bayes' theorem follows trivially from the product rule, two equivalent forms of which are:

P(AB | C) = P(A | C) × P(B | AC)

and

P(AB | C) = P(B | C) × P(A | BC)

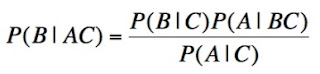

Equating the right hand sides of each of these and dividing one factor across yields Bayes' theorem in its most general form:

Above, P(B | C) is the prior probability for B, conditional upon C. A is some information additional to the prior information. P(A | BC) is the probability for A, given B and C, termed the likelihood function. P(A | C) is the probability for A, regardless whether or not B is true. Finally, P(B | AC) is the posterior probability for B, once the additional information is added to the background knowledge.

For convenience, the A in the denominator on the right hand side of this expression is often resolved into a set of relevant, mutually exclusive (meaning that the probability for more than 1 of them to be true is zero) and exhaustive (they must account for all possible ways that A could be true) 'basis' propositions. A simple example is as AB and AB' (B' denoting "B is false"). This usually allows the denominator to be split up into a sum of terms that can be more easily calculated. For example:

P(A | C) = P(AB + AB' | C)

Applying the extended sum rule (noting again the mutual exclusivity) and the product rule to each of the resulting terms gives:

P(A | C) = P(B | C)P(A | BC) + P(B' | C)P(A | BC)

If desired, we can extend this procedure to 3 'basis' propositions by further resolving AB' into 2 further non-overlapping propositions, and into 4 propositions, and so on, ad infinitum, if wished.

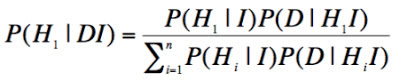

In a typical hypothesis testing application, the hypothesis space includes n hypotheses, (H1, H2, H3, ..., Hn). The background knowledge, consisting of the prior information and some model, is labelled I. Finally the novel information used to update the prior constitutes some set of empirical observations, known as the data, D. Substituting these into the general form, above, gives meaning to the abstract symbols, providing a more user-friendly version of Bayes' theorem:

The Σ in the above formula denotes summation over the entire hypothesis space. In cases where the hypothesis space is continuous, rather than discrete, the summation becomes an integral.

Related Entries:

Related Blog Posts:

The Base Rate Fallacy was my first blog post that introduced Bayes' theorem, providing a common-sense derivation for a problem with two competing hypotheses

How To Make Ad-Hominem Arguments illustrates an instance of testing infinite hypotheses with Bayes' theorem.

External Links:

Bayes, Thomas, "An Essay Towards Solving a Problem in the Doctrine of Chance," Philosophical Transactions of the Royal Society of London 53 (0): 370–418 (1763). Available here.

Back to top

Bernoulli Urn Rule

The Bernoulli Urn rule is a basic principle of sampling theory, related to the probability for an event to occur in an experiment, when there is more than one possible outcome of the experiment corresponding to the event. An example is when rolling a die, the probability to obtain an even numbered face, Which can be achieved in 3 different ways: 2, 4, or 6.

The rule is derived from symmetry principles, as an extension of the principle of indifference, and thus takes as its starting point the condition that all possible outcomes of the experiment are equally likely. If there are R possible ways for statement A to be satisfied by an experiment, and N total possible different outcomes of the experiment, then the desired probability, P(A), is just R divided by N.

Thus, the probability to obtain an even number on rolling a die is 3/6 = 0.5.

The rule is readily justified by applying the extended sum rule to a uniform probability distribution across all possibilities.

Related Entries:

Indifference

Back to top

Binomial Distribution

The binomial distribution is an important probability distribution, specifying the probability for each possible number of outcomes of a given type, in a class of experiments known as sampling with replacement, when there are only two possible types of outcome.

Sampling with replacement means that each sample is drawn from the same population. The probabilities associated with each type of outcome are therefore constant throughout the experiment. One of these probabilities is usually labelled p. From the sum rule, the other probability is 1-p. A typical example of sampling with replacement under such conditions is repeated tossing of a coin.

We can attach arbitrary labels, '0' and '1' to the two types of possible outcome in a Bernoulli trial. Lets say the probability for a '1' is p. (Many authors label this outcome as a 'success', but we don't want to disguise the generality of the formalism.)

A given sequence of n samples, say '00110111', constitutes a conjunction of, in this example, a '0' at the first location, a '0' at the second location, a '1' at the third location, a '1' in the fourth location, etc. The prior probability to have obtained this sequence, from the product rule, is therefore (1-p)(1-p)pp.... or, since there are k '1's and n-k '0's, this probability can be expressed as pk (1-p)n-k.

From the expression just derived, it is clear that the probability with which any particular sequence of k '1's in n trials appears is independent of the order of the sequence. The proposition "k '1's in n trials", however, is a conjunction of all the possible (mutually exclusive) sequences with exactly k '1's, and so the probabilities needed for the binomial distribution are given by the extended sum rule: pk (1-p)n-k must be multiplied by the number of ways to distribute the k '1's over the n trials. The situation is analogous to randomly throwing k pebbles into n (unique) buckets (where k < n), without caring in what order the k buckets are selected. The number of distinct possibilities is the number of combinations, nCk.

The expression for the binomial distribution is therefore:

An example of a binomial probability mass function is plotted, for n = 10, p = 0.3:

Important general properties of the binomial distribution are:

- mean = np

- The peak position is given by different formulae under different circumstances

(1)

|

floor([n+1]p)

|

if (n+1)p is 0 or a noninteger

|

(2)

|

(n+1)p

|

if (n+1)p ∈{1, 2, ..., n}

|

(3)

|

n

|

if (n+1)p = n+1

|

- standard deviation = √np(1-p)

Both the Poisson and normal distributions can be derived as special cases of the binomial distribution. The binomial distribution is itself a special case of the hypergeometric distribution, in the limit where the size of the sampled population is infinite (the 'sampling with replacement' condition).

Related Entries:

Boolean Algebra

Boolean algebra is a mathematical system for investigating binary propositions. Such propositions are represented by Boolean variables, whose values are limited to either 0 or 1, corresponding, respectively, to the proposition being assumed false or true. No Boolean variable can be both 0 and 1.

In the probability theory that I discuss, all propositions about the real world are assumed to be Boolean. They are exclusively either true or false, and never anything in between.

Boolean algebra was introduced by George Boole, in 1854.

The system can be most easily understood as making use of three elementary logical operations. These operations are (other notations exist for these operations):

- negation, denoted NOT(A), for some single proposition, A

- conjunction, denoted AND(A, B), for two propositions A and B

- disjunction, denoted OR(A, B), again for two propositions

All other logical operations can be derived from these three, though they do not represent a minimal set (with cascaded NAND operations (negated ANDs), these three and all others can be formed, though some intuitive appeal is lost).

The AND and OR operations can be generalized to any number of inputs. The AND operation defines a new proposition, which is true only if all the inputs are true. The OR operation returns true if any of the inputs is true. The NOT operation converts 0 to 1 and converts 1 to 0.

Common derived logical operations include NAND (negated AND), NOR (negated OR), XOR (exclusive OR), material equivalence (negated XOR), implication, and logical equivalence.

Several laws exist for the manipulation of Boolean expressions. This list of 20 or so laws on Wikipedia gives a good summary of how logical expressions can be transformed, though axiomatization of Boolean algebra is possible with only a single law (a complicated formula not included in this list).

Using these laws, simplification of complex Boolean expressions is often possible. For example, one of the laws states that AND(A, OR(A, B)) = A, which complies with common sense. If A is true, then 'A or B' is also true, and the expression is true, while if A is false, any expression of the kind 'A and X' is false, so the expression is false.

The process of reducing a logical expression to its simplest form is known as Boolean minimization.

Arithmetic and many forms of logic needed for rationality can be performed using Boolean algebra. For example, 2 numbers expressed in binary form (base 2) can be added using arrays of full adder circuits, which are composed of elements performing the equivalent of AND, OR, and NOT operations.

In digital electronic circuits, Boolean computations are typically performed on inputs using networks of transistors. A circuit that implements a basic Boolean operation is known as a logic gate.

Related Entries:

not

conjunction

disjunction

Related Blog Posts:

The Full Adder Circuit

Back to top

Calibration

Calibration is the process of inferring the relationship between the states of a measuring instrument, and the possible states of nature that cause them.

Often, calibration is considered to consist of finding the condition of nature that is the most likely cause of each available state of the instrument. A more complete calibration process, however, involves inferring for each output state of the instrument, s, a probability distribution, P(c | s I), over the possibly true conditions, c, of reality.

Such a probability distribution encodes useful information such as the amount of uncertainty associated with a measurement (its error bar), and the presence of any systematic bias in the behaviour of a measuring device. Any non-systematic variability of an instrument is termed noise.

Calibration is performed by examining the output of the instrument for a number of standard input conditions, assumed to obey some set of symmetry relationships.

For example, the standard kilogram is a block of metal used for calibration of instruments for measuring mass. Assumed symmetries for this standard include the postulate that the mass of the standard kilogram does not vary with time (something now known to be false).

Calibration standards are usually kept under very highly controlled environmental conditions to reduce the probability for their associated symmetry conditions to be significantly violated.

Symmetries also must be inferred in relation to the behaviour of the instrument, such as the exact correctness of the law of the lever, under all circumstances, when using a weighing balance for the measurement mass.

Because of the need to assume such symmetries, whose validity can only be established using additional instrumentation, itself relying on further assumed principles of conservation, calibration of an instrument is always necessarily probabilistic. This condition is referred to here as the calibration problem. This reliance on supposed symmetry is equivalent to the problem of theory ladenness.

Related Entries:

Noise

Theory Ladenness

Related Blog Posts:

Calibrating An X-ray Spectrometer - First Steps

Calibrating An X-ray Spectrometer - Spectral Distortion

The Calibration Problem: Why Science Is Not Deductive

Back to top

Combination

A combination is a sampling of k objects from a population of n objects, for which the order of the drawn objects is undefined or irrelevant. Each ordering of any particular k objects is an instance of the same combination.

The notation for the number of possible combinations given n and k is nCk. An alternative notation consists of a pair of round brackets, containing the number n positioned directly above the number k, as shown below.

Because each combination consists of several orderings of objects, the number of possible combinations for any (n, k) is less than the number of permutations. For a sample of k objects, there are k! possible orderings, meaning that the number of possible combinations is the number of possible permutations, divided by k!:

The above expression is termed a binomial coefficient.

The counting of combinations constitutes one of two indispensable capabilities, (the other being the counting of permutations) in the calculation of sampling distributions. Sampling distributions are themselves of vital importance in the making of predictions (so called 'forward' probabilities) and in the calculation of the likelihood functions used in hypothesis testing (so called 'inverse' problems).

Related Entries:

Confidence Interval

A confidence interval (C.I.) is a region in which the true value of some estimated parameter is inferred with high confidence to reside.

Since confidence intervals relate to degrees of belief, it seems obvious that their determination should depend on the calculation of probabilities. Furthermore, since rationality demands that all relevant available information be taken into account when quantifying degrees of belief, it follows that a confidence interval should be derived from a posterior distribution.

Unfortunately, the standard definition of a confidence interval does not insist on this. Consequently, many confidence intervals do not do exactly what was expected or hoped for by their architects, sometimes to the point of delivering grossly misleading impressions.

A great deal of confusion results from the standard definition of confidence intervals, which has led me to make use of my own unconventional definition, which is guaranteed to do what every confidence interval should:

Important note:

This is not the standard definition of a confidence interval.

My definition complies with the standard definition, though, (all C.I.s obtainable using my criterion constitute a subset of all intervals compatible with the orthodox definition), while narrowing it in a way that ensures that the resulting intervals will have all the properties that a confidence interval rationally should have. For example, my C.I.s have the property that a wider confidence interval always results from a less precise measurement. This is not guaranteed under the standard nomenclature (see my blog post, and literature cited there).

In a one-dimensional parameter estimation problem, such an interval would be a line segment, (L, U), specified in terms of lower (L) and upper (U) confidence limits. Such a pair of limits specifies a convenient form of error bar.

In a two-dimensional case, the confidence interval will be the area bounded by some closed curve. For a bivariate normal distribution, for example, the confidence interval will be an ellipse.

Note that even having specified X, and having determined a posterior distribution, there are many possible confidence intervals. In 1D, for example, one can imagine obtaining a C.I. centered on the mean of the distribution, and then obtaining another C.I. by reducing both L and U by small amounts, such that the enclosed posterior density remains the same.

Normally, one is interested in the narrowest possible interval, containing some point estimate of the parameter (e.g. expectation, or median).

A confidence interval (C.I.) is a region in which the true value of some estimated parameter is inferred with high confidence to reside.

Since confidence intervals relate to degrees of belief, it seems obvious that their determination should depend on the calculation of probabilities. Furthermore, since rationality demands that all relevant available information be taken into account when quantifying degrees of belief, it follows that a confidence interval should be derived from a posterior distribution.

Unfortunately, the standard definition of a confidence interval does not insist on this. Consequently, many confidence intervals do not do exactly what was expected or hoped for by their architects, sometimes to the point of delivering grossly misleading impressions.

A great deal of confusion results from the standard definition of confidence intervals, which has led me to make use of my own unconventional definition, which is guaranteed to do what every confidence interval should:

An X% confidence interval is a connected subset of the hypothesis space that has a posterior probability of X to contain the true state of the world.

Important note:

This is not the standard definition of a confidence interval.

My definition complies with the standard definition, though, (all C.I.s obtainable using my criterion constitute a subset of all intervals compatible with the orthodox definition), while narrowing it in a way that ensures that the resulting intervals will have all the properties that a confidence interval rationally should have. For example, my C.I.s have the property that a wider confidence interval always results from a less precise measurement. This is not guaranteed under the standard nomenclature (see my blog post, and literature cited there).

In a one-dimensional parameter estimation problem, such an interval would be a line segment, (L, U), specified in terms of lower (L) and upper (U) confidence limits. Such a pair of limits specifies a convenient form of error bar.

In a two-dimensional case, the confidence interval will be the area bounded by some closed curve. For a bivariate normal distribution, for example, the confidence interval will be an ellipse.

Note that even having specified X, and having determined a posterior distribution, there are many possible confidence intervals. In 1D, for example, one can imagine obtaining a C.I. centered on the mean of the distribution, and then obtaining another C.I. by reducing both L and U by small amounts, such that the enclosed posterior density remains the same.

Normally, one is interested in the narrowest possible interval, containing some point estimate of the parameter (e.g. expectation, or median).

Related Entries:

Back to top

Conjunction

A conjunction is a logical proposition asserting that two or more sub-propositions (e.g. X and Y) are both true. Different ways of denoting such a proposition are 'XY', 'X.Y', and 'X∧Y'. These notations can be read as 'X and Y are true'. Sometimes when expressing probabilities, a comma is used to express a conjunction, as in P(X,Y), or P(Z | X,Y). The probability associated with a conjunction is obtained from the product rule, a basic theorem of probability theory.

Using a 1 to represent 'true' and a zero to represent 'false', the truth table for the conjunction of X and Y is as shown below. For each of the possible combinations of values for X and Y (left-hand and centre columns), the truth of the conjunction is shown (right-hand column). As indicated, X.Y is only true when X is true and Y is true:

A conjunction is a logical proposition asserting that two or more sub-propositions (e.g. X and Y) are both true. Different ways of denoting such a proposition are 'XY', 'X.Y', and 'X∧Y'. These notations can be read as 'X and Y are true'. Sometimes when expressing probabilities, a comma is used to express a conjunction, as in P(X,Y), or P(Z | X,Y). The probability associated with a conjunction is obtained from the product rule, a basic theorem of probability theory.

Using a 1 to represent 'true' and a zero to represent 'false', the truth table for the conjunction of X and Y is as shown below. For each of the possible combinations of values for X and Y (left-hand and centre columns), the truth of the conjunction is shown (right-hand column). As indicated, X.Y is only true when X is true and Y is true:

| X | Y | X.Y |

| 0 | 0 |

0

|

| 0 | 1 |

0

|

| 1 | 0 |

0

|

| 1 | 1 |

1

|

Related Entries:

Back to top

Consequentialism

Consequentialism is a branch of moral philosophy, in which it is believed that only the expected results of one's actions determine whether one's actions are optimal.

This contrasts most strongly with absolutism, which asserts that there exist absolute principles of morality that hold, irrespective of the consequences of one's actions.

Consequentialism (or a subset of it, at least) can be shown to be the only correct point of view, by making use of simple statements that follow automatically from an uncontroversial understanding of the meaning of the word 'good,' as explained in the blog post, Scientific Morality. These arguments are further built upon in another post, Crime and Punishment.

The consequentialism that I defend is distinct from 'actual consequentialism,' which is the belief that only actual consequences determine whether a person's behaviour is moral. This position is indefensible, however, as it allows no way to distinguish between an action based on sound reasoning from high-quality evidence, that nonetheless fails to produce a good outcome, and an action based on willful ignorance and irrationality, that also fails. Coherence demands, however, that is is better to be wrong for good reasons, than to be equally wrong for bad reasons (my blog post, Is Rationality Desirable?, goes into far greater depth than this hand waving argument). Thus, an action is morally better than another if its outcome is expected, under reliable reasoning, to be better than the outcome of the other action.

An action with optimal results, therefore, may not be morally better than another with sub-optimal results, if, for example, prior to the event only an idiot would have predicted a good outcome.

An action with optimal results, therefore, may not be morally better than another with sub-optimal results, if, for example, prior to the event only an idiot would have predicted a good outcome.

Conventional thought finds difficulty reaching the conclusion that uniquely true assessments of value exist as matters of fact, and hence that consequences can be objectively classified as better or worse, but since value is a property of conscious experience, there can be no doubt such a thing exists, and hence that statements about value are either true or false. The quality of an assessment of value, though, obviously depends on the quality of our moral science.

Related Entries:

Related Blog Posts:

Back to top

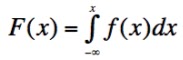

Cumulative Distribution Function

The cumulative distribution function, or CDF, F(x), is the integral of a probability distribution function, f(x), from -∞ to x.

From the extended sum rule, it is clear that this corresponds to the probability that any one of the hypotheses in the region from X = -∞ to X = x is true:

F(x) = P(X ≤ x)

From the definition of the definite integral, it is clear that the probability associated with a composite hypothesis, a < x ≤ b, is given by

P(a < x ≤ b) = F(b) - F(a)

As an example of the CDF, the plot below shows the CDF (red curve) of a normal distribution (blue curve). Note that the two curves are plotted on different scales - in reality, the CDF is at all points higher then the PDF.

The complement of the CDF is termed the survival function, and the inverse of the CDF is termed the quantile function.

Related Entries:

Derivative

The derivative of a function of x, y = f(x), at some point, x0, is loosely defined as the rate of change of the function at x0. More precisely, it is the slope of the tangent line to the curve traced by f(x) at that point (assuming that f(x) is differentiable). (A tangent line is a line touching the curve at exactly one point.)

Three common notations for a derivative are dy/dx, f'(x), and y'.

Imagine two points on the curve, f(x): one at x0, and the other positioned a distance h, further down the x-axis. The slope of the line through these two points is:

As h gets smaller and smaller, we get closer to obtaining the slope of the tangent in the definition of the derivative. Mathematically, we take the limit of the above formula, as h gets arbitrarily close to, but not equal to zero:

Using this expression, certain standard derivatives have been worked out, and some of the common ones are summarized in the table below.

For example, using the results for powers of x, and for a sum of functions, the derivative of a polynomial function such as the cubic function, y = 2x3 - 2x2 - 6x + 10, can be easily worked out by formula. The graph below shows this function (blue curve). The formula for the derivative, dy/dx, is worked out from the rules in the table (items 1, 2, and 6), and is included on the graph. Choosing some value of x (x = -1, as it happens), the derivative at that location is found from the formula for dy/dx to be 4, and the corresponding y value (y = 12) is obtained from the original formula for the function. With the x-value, the y-value, and the slope of the tangent line (dy/dx), the tangent line is completely specified, and is also plotted (red line):

As long as a curve, f(x), is smoothly varying, the derivative can be found at all x positions on the curve using the above procedure, or using some numerical approximation. Numerical approximation of dy/dx consists of computing Δy/Δx for two points separated, in x coordinate, by some very small distance, Δx.

The process of calculating a derivative is termed differentiation.

The derivative is one of two fundamentally important concepts in the mathematical discipline known as calculus. The other concept is the integral. The fundamental theorem of calculus relates derivatives to integrals. Approximately speaking, taking the integral of a derivative returns the original function, and vice versa, taking the derivative of an integral returns the original function.

A turning point of a function, where the function passes through a local peak or trough, is characterized by the slope of the function changing from positive to negative, or vice versa. At the turning point, therefore the derivative is zero. Solving for the roots of f'(x) thus give the turning points of f(x).

Table of standard derivatives:

Item

|

Situation

|

y = f(x)

|

f'(x)

|

a constant |

c

|

0

| |

A sum of functions |

u(x) + v(x)

|

u'(x) + v'(x)

| |

3 | A product of functions |

u(x)v(x)

|

u(x)v'(x) + v(x)u'(x)

|

Quotient of functions | |||

Chain rule

(see note below) |

y = g(u), u = h(x)

| ||

Powers of x |

cxn

|

cnx(n-1)

| |

Exponential |

ekx

|

k ekx

| |

Number raised to the power x |

ax

|

ax ln(a)

| |

Natural logarithm |

ln(x)

| ||

Other logarithms |

loga(x)

| ||

Sine |

sin(x)

|

cos(x)

| |

Cosine |

cos(x)

|

-sin(x)

| |

Tangent |

tan(x)

|

sec2(x)

| |

Secant |

sec(x)

|

sec(x)tan(x)

| |

Cosecant |

cosec(x)

|

-cosec(x)cotan(x)

| |

Hyperbolic sine |

sinh(x)

|

cosh(x)

| |

Hypoerbolic cosine |

cosh(h)

|

sinh(x)

| |

Arc sine |

sin-1(x)

| ||

Arc cosine |

cos-1(x)

| ||

Arc tangent |

tan-1(x)

| ||

Arc hypoerbolic sine |

sinh-1(x)

| ||

Arc hypoerbolic cosine |

cosh-1(x)

| ||

Arc hypoerbolic tangent |

tanh-1(x)

|

Of particular note in the table is item 5, the chain rule. Using the chain rule, composite functions built out of several of the above standard functions can be differentiated. For example, item 8 gives the derivative of a constant raised to the power of x. A function, g(u) = ah(x), consisting of a constant raised to the power of a function of x is differentiated by setting u equal to the exponent, h(x), and taking the product of two standard derivatives, exactly as prescribed by the above formulation of the chain rule. Higher-order nesting of functions can be attacked by applying the chain rule repeatedly.

Related Entries:

Disjunction

A disjunction is a logical proposition asserting that at least one of two or more sub-propositions is true. Up to all the sub-propositions referred to by a disjunction may be true, for the disjunction to hold (this is distinct from the logical XOR operation - exclusive or - which asserts that exactly one of the sub-propositions is true). For two propositions, X and Y, the disjunction is typically written 'X+Y', which is read 'X or Y'. Another notation is 'X∨Y'.

A disjunction is a logical proposition asserting that at least one of two or more sub-propositions is true. Up to all the sub-propositions referred to by a disjunction may be true, for the disjunction to hold (this is distinct from the logical XOR operation - exclusive or - which asserts that exactly one of the sub-propositions is true). For two propositions, X and Y, the disjunction is typically written 'X+Y', which is read 'X or Y'. Another notation is 'X∨Y'.

The extended sum rule, a basic theorem of probability theory specifies the probability for a disjunction, P(A+B).

Using a 1 to represent 'true' and a zero to represent 'false', the truth table for the disjunction, X+Y, is as shown below. For each of the possible combinations of values for X and Y (left-hand and centre columns), the truth of the disjunction is shown (right-hand column). As indicated, X+Y is only false when X is false and Y is false:

Using a 1 to represent 'true' and a zero to represent 'false', the truth table for the disjunction, X+Y, is as shown below. For each of the possible combinations of values for X and Y (left-hand and centre columns), the truth of the disjunction is shown (right-hand column). As indicated, X+Y is only false when X is false and Y is false:

| X | Y | X+Y |

| 0 | 0 |

0

|

| 0 | 1 |

1

|

| 1 | 0 |

1

|

| 1 | 1 |

1

|

Related Entries:

Conjunction

Extended sum rule

Back to top

Effect Size

Effect size is a measure of the distance between two probability distributions over similar hypothesis spaces. It is used to quantify the magnitude of an effect.

For example, if farmers found that wearing pink T-shirts made potatoes grow larger, a crude measure of effect size would be the difference between the average size of a potato grown by a pink T-shirt wearing farmer and a non pink T-shirt wearing farmer. The probability distributions in question concern the size of a randomly sampled potato, and the continuous hypothesis space is e.g. potato mass.

More usually, measures of effect size are standardized to account for the width of the effect-free probability distribution. Thus, staying with the potato example, if the mass of a randomly sampled potato, grown by a non pink T-shirt wearer, is 500 g ± 200 g, an increase to only 520 g average mass for the pink T-shirt wearer represents only a small effect size. Many of the treated cases will be smaller than many of the untreated cases, and if wearing a pink T-shirt happens to be costly, it may not be economical to apply this treatment, even though it increases yield.

The are many measures of effect size, but an unqualified use of the term usually refers to the difference between the means for the with-effect and without-effect cases, divided by the standard deviation (assuming this is the same for both cases).

Back to top

Evidence

Evidence is any empirical data that when logically analyzed results in some change to the assessment of the reliability of some hypothesis.

When measured in decibels, the evidence for a proposition, A, is given in terms of the logarithm of the odds for A, O(A):

E(A) = 10 × log10(O(A))

When the evidence is 0 dB, therefore, the proposition A is equally as likely as its compliment, A'. Evidence amounting to about 30 dB corresponds to P(A) = 0.999. Not surprisingly, a probability of 0.001 corresponds to evidence of about -30 dB (E(A) = -E(A')).

Rather than quantifying the totality of evidence for A (which demands specification of a prior), it is possible to give the amount of change of evidence supported by some set of data. This change of evidence is also termed 'evidence'. It is calculated by replacing the odds, O(A), in the above formula, by the ratio of the likelihood functions for A and its compliment, A'. Because the evidence is a logarithmic function, the posterior evidence is given simply as the sum of the prior evidence and this relative evidence associated with the data.

Some authors claim that the use of the base 10 and the factor of 10 give a general psychological advantage when it comes to appreciating and interpreting evidence quantified in dB.

Related Entries:

Odds

Likelihood Function

Back to top

Expectation

The expectation of a distribution is the centre of mass of that distribution. It is one of the most common measures of the location of a probability distribution. Other common terms for the expectation are the mean and the average.

If a probability distribution describes the likely outcomes when sampling a random variable, X, then the expectation is denoted with angled brackets, thus:

μx = 〈X〉

Under many circumstances, (corresponding to a common class of utility function)〈X〉is considered to be our best possible guess for the value of a sample from this distribution, x (i.e. an instance of the random variable, X).

For a discrete distribution, the expectation is calculated using the following formula:

The following formula is used for a continuous distribution:

The motivation for these formulas is described in another entry on the concept of mean, together with discussion of their interpretation and important distinctions between the expectation and other measures of location.

Related Entries:

mean

Related Blog Posts:

Great Expectations discusses further properties of expectations.

Back to top

The extended sum rule is a basic theorem of probability theory, specifying the probability associated with a disjunction, A+B.

The rule is derived easily from the sum and product rules, and is written:

P(A+B | C) = P(A | C) + P(B | C) - P(AB | C)

Often, for convenience, a proposition will be resolved into a disjunction of mutually exclusive 'basis' propositions, in which case the probability associated with the conjunction, AB, is zero, and the rule simplifies.

The rationale behind the extended sum rule is intuitively appreciated by drawing a Venn diagram to represent the sample space, consisting of 2 interlocking circles, one for A and the other for B (see figure). We imagine the areas of the circles to be proportional to P(A) and P(B) respectively. The region of overlap is thus proportional to P(AB). The sum, P(A) + P(B), therefore, counts the overlap P(AB) twice, so to determine P(A+B), this overlap needs to be subtracted.

By drawing the appropriate truth table, we can easily confirm that the negation of the disjunction A+B is the same proposition as A'.B', "not A and not B" (note that A'.B' and (A.B)' are not the same):

Applying the sum rule (SR) and inserting this substitution, and then also applying the product rule (PR), we can proceed to towards our goal:

From the last line, above, we simply multiply out the terms and apply the product rule one final time to arrive at the extended sum rule:

The extended sum rule is one of three basic theorems of probability theory, the other two being the product rule and the sum rule.

| A | B | A+B | A'.B' |

| 0 | 0 |

0

| 1 |

| 0 | 1 |

1

| 0 |

| 1 | 0 |

1

|

0

|

| 1 | 1 |

1

|

0

|

Applying the sum rule (SR) and inserting this substitution, and then also applying the product rule (PR), we can proceed to towards our goal:

| P(A+B | C) | = 1 - P(A'.B' | C) | .... | (SR) |

| = 1 - P(A' | C) × P(B' | A'C) |

....

| (PR) | |

| = 1 - P(A' | C) × [1 - P(B | A'C)] |

....

| (SR) | |

| = P(A | C) + P(A'.B | C) |

....

|

(SR & PR)

| |

| = P(A | C) + P(B | C) × P(A' | BC) |

....

|

(PR)

| |

| = P(A | C) + P(B | C) × [1 - P(A | BC)] |

....

|

(SR)

| |

P(A+B | C) = P(A | C) + P(B | C) - P(AB | C)

In the case of disjoint propositions, the extended sum rule is easily generalized to more than 2 propositions. Suppose we start with exclusive propositions A1 and B. Then we have:

P(A1+B | C) = P(A1 | C) + P(B | C)

Now, we recognise that B actually can arise in either of two possible ways, thus,

B = A2+ A3

Consequently, P(B | C) = P(A2 | C) + P(A3 | C), and the disjunction of these 3 events is

P(A1+A2+A3| C) = P(A1 | C) + P(A2 | C) + P(A3 | C)

This process could be extended, for example, by further resolving A3 into a durther conjunction, and so on.

Other, more general, generalizations are possible.

Related Entries:

Conjunction

Disjunction

Back to top

False Negative Rate

The false negative rate, often denoted as β, is the probability that an instrument will yield 'false' as output, given that the state of reality (with respect to the question posed to the instrument) is 'true'.

The false negative rate is closely analogous to the false positive rate, and further explanation of the concept can be found under that entry.

False negatives are also known as 'type II errors.'

The false negative rate is the frequency with which false negatives occur, relative to the total frequency with which the state of reality is 'true'. Thus, stated as a formula, the false negative rate is:

Since the sensitivity of a test is the probability that the instrument will infer 'true,' given that the state of reality is 'true,' another formula for the false positive rate is:

Related Entries:

False Positive Rate

Sensitivity

Specificity

Back to top

False Positive Rate

The false positive rate, often denoted as α, is the probability that an instrument will yield 'true' as output, given that the state of reality (with respect to the question posed to the instrument) is 'false'.

Sometimes, an instrument must provide an output that is binary: does the subject have the disease or not? Should the fire alarm be activated or not? Was that small region of the hard drive encoded with a 0 or a 1? Etc.

The output of the instrument must be either positive or negative. On the other hand, the state of reality, with respect to the current question, must be either 'true' or 'false'. These two categories are not always the same (one refers to the state of the instrument, and the other refers to the system that the instrument is trying to diagnose).

A false positive, therefore, is an instance where the instrument outputs 'positive,' i.e. infers reality's state to be 'true,' even though reality's state is actually 'false.' False positives are also termed 'type I errors.' We need to be careful with the terminology, however - the 'false' in 'false positive,' refers not to the state of reality, but to the correctness of the instrument's diagnosis. Thus, a true negative is a case where the instrument infers reality's state to be 'false', and this inference is correct.

The false positive rate is, therefore, the frequency with which false positives occur, relative to the total frequency with which the state of reality is 'false'. Thus, stated as a formula, the false positive rate is:

Since the specificity of a test is the probability that the instrument will infer 'false,' given that the state of reality is 'false,' another formula for the false positive rate is:

In general, designing an instrument involves choosing an appropriate trade-off between false positive rate and false negative rate, as decreasing one is most easy to do by increasing the other.

Related Entries:

False Negative Rate

Sensitivity

Specificity

Back to top

Falsifiability Principle

The falsifiability principle asserts that in order for a proposition to be scientific, and suitable for scientific investigation, it must be vulnerable to falsification.

The principle was originated by Karl Popper, and is more commonly known as the falsification principle. At Maximum Entropy, however, the term falsifiability is preferred as it seems more precise, the traditional term being often misinterpreted, by scientists and 'lay people' alike, to imply that the purpose of science is to falsify theories. Correctly interpreted, falsifiability is to be seen as a demarcation criterion, dividing propositions into those that are useful and those that aren't.

Relatedly, Popper believed that falsification was the only way for knowledge to advance, but this is incompatible with a rational view point, as, in light of new information, probabilities may be altered upwards or downwards, and in increments almost always less than those required to reach maximal certainty.

The falsifiability criterion can be understood in terms of the likelihood function associated with some data set, D. If a theory, H, is unfalsifiable then the likelihood, P(D | HI), will always be high (relative to the peak of the likelihood), regardless what data have been observed. No useful predictions are possible with such a theory. Assigning a high probability to H, therefore, teaches us nothing about nature, and the theory is useless. The term 'pseudoscience' is often used for such theories.

Unfalsifiable theories also necessarily possess infinite degrees of freedom (an unlimited number of fitting parameters), which I argue necessarily assigns to them strictly zero prior probability. Unfalsifiable theories are not, therefore, untestable, as many assume. One only needs to postulate the existence of an alternate hypothesis with finite degrees of freedom (which is always a sound premise) in order to automatically assign a probability of zero to such theories, making them the most readily testable propositions going around.

Related Entries:

Related Blog Posts:

Bayes' Theorem: All You Need to Know About Theology describes the impact of the infinite degrees of freedom of unfalsifiable theories

Inductive inference or deductive falsification? shows that falsification is just another bog-standard part of inductive inference

Back to top

Frequency Interpretation

The frequency interpretation of probability is a popular but severely flawed understanding of the meaning of probabilities. According to the frequency interpretation, each probability corresponds to a physical frequency. This means that the probability associated with some event is the same as the relative frequency with which that event will occur in some infinite set of physical experiments.

For example, in a coin tossing experiment the relative frequency with which the coin lands with the head facing up might be 0.5, which, typically would also be the probability, P(head). In this case, the frequency and the probability are the same.

The correspondence breaks down, however, in several trivial ways. In the coin tossing example, before any trials have been performed, and with no pertinent information to the contrary, symmetry demands that I assign 50% probability to the occurrence of a head. The physical coin, however, along with the tossing procedure, may be biased to produce some different frequency of outcomes, and so the probability is more properly seen not as a physical frequency, but as a statement of one's available knowledge about the experiment.

The frequency interpretation breaks down even more dramatically when considering the probability for propositions such as "the universe is between 13.7 and 13.9 billion years old." There is no sense in which the truth of such statements can vary with any frequency.

Some have tried to rescue the frequency interpretation by arguing that among the set of all hypotheses for which the probability is x, the fraction that are true is x, and that x is therefore a physical frequency. This, however, makes some important errors.

Firstly, every probability is determined, assuming the truth of some model, and can not be divorced from that model. It is trivial for me to test the same hypothesis within two separate models, arriving at two different probabilities. The proposed, rescued frequency interpretation, however, is powerless to say which probability is the correct physical frequency.

Secondly, given what we know about the procedures used to generate hypotheses, it is surely the normal thing for our theories to be false, no matter what probability they attain. For a long time, for example, it appeared that the universe obeyed Newtonian mechanics. Physics was then a matter of solving certain equations, with the numerical results of this procedure evidently attaining high probability. We now know (with high confidence) that reality is not Newtonian, and that all these results were, in fact, false. As false, in fact, as the results of stone-age divination techniques, though not equally wrong, of course. The falsification of Newtonian physics was a normal part of science, and there is no valid reason to expect this kind of thing to stop any time soon. A probability, therefore, is no more than an expected frequency, assuming the truth of some model. It is, consequently, not a physical parameter, but an imagined entity.

Adherents of the frequency interpretation are known as frequentists.

Traditional favourite techniques of frequentists most notably include null-hypothesis significance testing and maximum likelihood parameter estimation, though each of these, sadly, commits the base-rate fallacy, resulting in limited validity.

Traditional favourite techniques of frequentists most notably include null-hypothesis significance testing and maximum likelihood parameter estimation, though each of these, sadly, commits the base-rate fallacy, resulting in limited validity.

Related Entries:

Related Blog Posts:

The Mind Projection Fallacy

Parameter Estimation and the Relativity of Wrong

Insignificance of Significance Tests (discusses the flaws inherent in null-hypothesis significance testing)

Fly Papers and Photon Detectors (illustrates the breakdown of maximum-likelihood parameter estimation)

Parameter Estimation and the Relativity of Wrong

Insignificance of Significance Tests (discusses the flaws inherent in null-hypothesis significance testing)

Fly Papers and Photon Detectors (illustrates the breakdown of maximum-likelihood parameter estimation)

Gaussian Distribution

Another name for the normal distribution.

Related Entries:

Hypothesis Test

A hypothesis test is a procedure for ranking the reliability of propositions about the real world by performing computations on some data set.

This is a more general definition than some specialists use, as some reserve the phrase 'hypothesis test' to refer to null-hypothesis significance tests. On Maximum Entropy, however, NHSTs are considered a special case, just one of many special-case classes of procedures, whose ultimate purpose is to mimic with varying success the output of Bayes' Theorem.

Null-hypothesis significance tests examine only a single hypothesis (the null hypothesis), and provide binary output (rejection of or failure to reject the null hypothesis).

Hypothesis tests based directly on Bayes' theorem offer a continuous range of outputs, from 0 to 1, constituting a posterior probability assignment. They can assess any integer number of hypotheses from 2 (binary hypothesis tests) up, and using differential calculus, can be applied to problems with infinite numbers of hypotheses.

Important classes of Bayesian hypothesis test are parameter estimation and model comparison.

Null-hypothesis significance tests examine only a single hypothesis (the null hypothesis), and provide binary output (rejection of or failure to reject the null hypothesis).

Hypothesis tests based directly on Bayes' theorem offer a continuous range of outputs, from 0 to 1, constituting a posterior probability assignment. They can assess any integer number of hypotheses from 2 (binary hypothesis tests) up, and using differential calculus, can be applied to problems with infinite numbers of hypotheses.

Important classes of Bayesian hypothesis test are parameter estimation and model comparison.

Related Entries:

Bayes' Theorem

null-hypothesis significance tests

parameter estimation

model comparison

posterior probability

null-hypothesis significance tests

parameter estimation

model comparison

posterior probability

Related Blog Posts:

The Base Rate Fallacy introduces binary hypothesis testing

How To Make Ad Hominem Arguments introduces infinite hypothesis testing

Parameter Estimation and the Relativity of Wrong concerns parameter estimation

Ockham's Razor discusses model comparison

How To Make Ad Hominem Arguments introduces infinite hypothesis testing

Parameter Estimation and the Relativity of Wrong concerns parameter estimation

Ockham's Razor discusses model comparison

Indifference

Indifference is one of a class of symmetry principles used to specify prior probability distributions.

Such principles derive from the basic desideratum that equivalent states of information lead to the same probability assignment.

The principle of indifference can be applied to all discrete distributions, under the circumstances of 'complete ignorance.' Complete ignorance implies that there is insufficient reason to favor one hypothesis over any other, and so symmetry demands that each hypothesis is assigned equal probability. Thus, when there are n hypotheses, the prior distribution from indifference is uniform, and from the normalization requirement, is equal to 1/n, for all hypotheses.

Under strict Bayesian reasoning, complete ignorance holds for the first iteration of Bayes' theorem. We might be told by a trusted source, for example, that a particular non-uniform prior is appropriate. In such a case, however, before the problem can be evaluated, rationality demands a necessary pre-evaluation of the probability that the source of the information is trustworthy. Such calculations are most often performed approximately and subconsciously.

Not all continuous distributions can be analyzed by the principle of indifference for the condition of maximal ignorance. This is because the resulting distribution is not generally invariant under transformations of the parameter space.

For example, if we are told that a square has sides of length between 5 and 10 cm, we might consider all lengths in this range to be equally probable. The given prior information, however, is equivalent to the statement that the area of the square is between 25 and 100 cm2, suggesting just as much that all areas in this range are equally likely. The second distribution, unfortunately, is not equivalent to the first. Since there is no compelling reason to give precedence to either the length or the area, some other method, such as the principle of transformation groups, must be used instead to determine the appropriate ignorance prior.

The principle of indifference can also be derived from the principle of maximum entropy, as shown in this blog post.

Related Entries:

Principle of Transformation Groups

Related Blog Posts:

Integral

The indefinite integral,

∫ F(x) dx

gives the area bounded by the function, F(x), and the x-axis. The process of calculating an integral is termed integration. The function F(x) is termed the integrand.

The integral is one of two fundamentally important concepts in the mathematical discipline known as calculus. The other concept is the derivative. The fundamental theorem of calculus relates derivatives to integrals. Approximately speaking, taking the integral of a derivative returns the original function, and vice versa, taking the derivative of an integral returns the original function.

Setting the above integral, therefore equal to a function, f(x), then F(x) = f '(x) satisfies the equation. Substituting the function f(x) + c for f(x), however, also satisfies this condition, as the derivative of a constant is zero. Thus, the general solution of the above integral is some function of x, plus some unknown constant:

∫ F(x) dx = f(x) + c

In some problems, the constant of integration, c, can be determined from boundary conditions.

A selection of common integrals, obtained from first principles, are provided in the table below.

The definite integral,

gives the area bounded by the curve, F(x), the x-axis, and the lower and upper limits, a and b, respectively. When the indefinite integral is given as f(x) + c, the above definite integral is therefore given as

For example, the figure below illustrates the definite integral between limits a = -1 and b = 2 for the cubic polynomial, y = 2x3 - 2x2 - 6x + 10. The integral corresponds to the shaded area:

From the table (items 1, 3, and 4), the indefinite integral for such a polynomial can be easily obtained by formula, and is

(Note that we can apply rules 1, 2, and 6 from the table of derivatives to the above result and quickly verify that the function, y = 2x3 - 2x2 - 6x + 10 is the result.) For the limits specified, the definite integral illustrated comes to exactly 22.5.

Not all functions, F(x), have an integral that can be expressed exactly. In such cases, the integral may still be evaluated approximately, using numerical techniques. The simplest form of numerical integration is to divide the area required into a series of narrow rectangles, whose individual areas are trivial to calculate. The width of each rectangle is some small increment, Δx, and the height is given by evaluating F(x) at the centre of the interval, at x + Δx/2. The process is illustrated using the same cubic polynomial:

With only 12 intervals, the total sum over all rectangles comes to 22.48, differing from the exact result by less than 0.1%.

Table of Standard Integrals:

In the following, the solution to ∫ F(x) dx is of the form f(x) + c. The constant of integration is omitted from the table.

Item

|

Situation

|

Integrand, F(x)

|

Integral,

f(x)

|

Sum of functions

|

g(x) + h(x)

| ||

A constant multiplying a function

|

a g(x)

| ||

A constant

|

a

|

a x

| |

x raised to some power

|

xn

| ||

1 divided by x

|

ln(|x|)

| ||

Exponential function

|

ex

|

ex

| |

A constant raised to the power x

|

ax

| ||

Sine function

|

sin(x)

|

-cos(x)

| |

Cosine function

|

cos(x)

|

sin(x)

| |

Tangent

|

tan(x)

|

-ln(cos(x))

| |

Arc sine

|

sin-1(x)

| ||

Arc cosine

|

cos-1(x)

| ||

Arc tangent

|

tan-1(x)

| ||

Reciprocal root of a quadratic, 1

| |||

Reciprocal root of a quadratic, 2

| |||

Reciprocal quadratic, 1

| |||

Reciprocal quadratic, 2

|

Many more standard integrals exist, see for example.

Techniques also exist for composite functions, such as integration by substitution (less technical introduction here), which is analogous to the chain rule for derivatives, for some functions of functions, and integration by parts for some products of functions.

Related Entries:

A joint probability is the probability associated with a conjunction. It is the probability that two or more propositions are true at the same time. A joint probability distribution gives a multi-dimensional probability density over several variables. For example, if we are interested in the current temperatures in London and New York, our relevant information could be expressed as a two-dimensional joint probability distribution, with each point in the 2D hypothesis space corresponding to a particular combination of temperatures: TL = X and TNY = Y.

An important example of a joint probability distribution is the multi-variate normal distribution.

Likelihood Function

The likelihood function gives the probability to have observed some data set, conditional upon some hypothesis, and a collection of background information, I. It is a function of the hypothesis, H, and not the data, D:

L(H) = P(D | HI)

When P(D | HI) is examined as a function of D, it is termed the sampling distribution, but it must be remembered that this is a different function, over a different domain. In particular, it is worth noting that because the likelihood function varies over the conditioning assumption, H, it is not a probability distribution function, and therefore is not normalized.

Often, the hypotheses of concern relate to the numerical values of some model parameters, θ = (θ1, θ2, θ3,...), and it's not uncommon to see the likelihood function denoted as L(θ).

The likelihood function plays an important role in Bayesian inference, and in maximum-likelihood parameter estimation.

The base-rate fallacy is a common mistake whereby inferences are drawn by looking only at the likelihood function (or something closely related), and not including the prior probability, as Bayes' theorem specifies. Under circumstances where the prior distribution is close to uniform, however, this kind of approximate reasoning may be sufficiently accurate.

Related Entries:

Marginal Distribution

A marginal distribution is a probability distribution over a parameter space of reduced dimensions. For example, if a parameter space ranges over x and y, the corresponding probability distribution is P(x, y | I). We may, however, only be interested in the probabilities associated with the various values for one of the parameters, x. The distribution of interest, P(x | I), is said to be marginalized over y. The parameter, y, is often termed a nuisance parameter.

The marginal distribution for x is given by:

The marginal distribution for x is given by:

The method required to calculate a marginal distribution arises fairly simply from the basic rules of probability. Suppose that we divide the entire parameter space over y into two non-overlapping regions, y1 and yA. Since these are the only possibilities in y-space, then the proposition, 'x' (our shorthand for 'X = x', where x is an event, and X is the random process generating that event) is logically the same as the proposition 'x and y1 or yA'. In symbols:

x ≡ x.[y1 + yA]

The probabilities for these must therefore be the same:

P(x | I) = P(x.[y1 + yA] | I)

= P(x.y1 + x.yA | I)

Employing the extended sum rule, and the fact that y1 and yA are disjoint (exclusive),

P(x | I) = P(x.y1 | I) + P(x.yA | I)

Next the product rule is invoked, yielding

P(x | I) = P(x | y1.I)P(y1| I) + P(x.yA | I)

If, however, we feel that two points is not sufficient to resolve all the possibilities in y-space, we can further resolve yA into two further propositions: y2 and yB. Thus, the last term in the above sum becomes

P(x.yA | I) = P(x.[y2 + yB] | I)

which can again be split up into a sum of 2 terms, exactly as was just done. This process can go on indefinitely, yielding a string of terms, one for each of the propositions, y1, y2, y3, y4, ... , yielding a final marginalized distribution,

In the most general case the parameter space over y will be continuous, and we can better represent the marginal distribution as an integral, where P(x) now represents a probability density:

Because P(.) is now being used to represent a probability density, with units inverse to those of the parameter space, the product P(y | I)×dy is dimensionless, and P(x | I) has the same units as P(x | y, I).

The process of marginalization can be extended to any number of dimensions. So for a distribution on three axes, P(x,y,z | I), the marginal distribution over z would be:

from which it can be seen that the marginal distribution over y and z (the distribution for x, irrespective of what y and z might be) is:

This last formula can be simplified, if y and z are conditionally independent, i.e. if P(y | z, I) = P(y | I).

Related Entries:

Nuisance Parameter

Related Blog Posts:

Maximum Entropy Principle

The maximum entropy principle is a mathematical prescription for assigning probability distributions. It is a way of figuring out an appropriate probability distribution, capturing the information one has on the problem in hand, without assuming any additional information, (beyond the necessary model assumptions), that is not possessed.

Of all the possible distributions consistent with the constraints of a given problem, the one for which the information-theoretic entropy is maximum is the one that is maximally non-committal. It entails no stronger belief than that which is inherently justified by the structure of the problem. This distribution assumes no additional information, beyond what is already known and encoded in the problem constraints, and thus provides the optimal description of the corresponding state of knowledge.

The maximum entropy principle is often used to assign prior probability distributions, before the subsequent use of Bayes' theorem to analyze the effect of some data set. It is perhaps most easily thought of as a means of providing uninformative priors. It is also possible, however, to use it for the direct assignment of posterior distributions. (The distinction between prior and posterior is arbitrary, so the theory would be inconsistent if this were not so.)

The information-theoretic entropy (also known as the Shannon entropy), denoted H, is given by the formula:

H = -Σi pi × log(pi)

where pi is the probability associated with the ith hypothesis in some discrete hypothesis space. The maximum entropy principle states that the distribution that maximizes this function is the one consistent with rationality.

A version of the above formula exists for continuous distributions.

Given a finite set of discrete hypotheses, and no further information, the maximum-entropy distribution is uniform over the hypothesis space.

For a continuous distribution with known mean and standard deviation, and no further information, entropy is maximized with the normal distribution.

Related Entries:

Related Blog Posts:

Entropy of Kangaroos introduces a very simple application example.

Entropy Games discusses the meaning of entropy.

Monkeys and Multiplicity provides an intuitive argument for the correctness of the principle.

The Acid Test of Indifference derives the uniform prior from MEP.

Maximum Likelihood

Maximum likelihood is an approximate method of parameter estimation, which involves finding the peak of the likelihood function, P(D | HI).

The method is closely associated with the frequentist tradition. It does not comply with Bayes' theorem, and it therefore does not make maximal use of available information. Its validity is limited to cases where the prior distribution is uniform, or negligibly informative, relative to the data.

Conventionally, when hypothesis testing is cast in the language of parameter estimation, the hypothesis, H, is denoted as a set of model parameters, θ = (θ1, θ2, ...), and the likelihood function is written:

The maximum-likelihood estimate of θ is often denoted with a circumflex:

The argmax() function gives the argument of L(θ), θ, at which L(θ) is maximized. Note, it is not the value of L(θ) at its maximum.

When the functional form of L(θ) is known, argmax() is often found by setting the derivative of L(θ) equal to zero and solving.

When the data set, D, consists of several individual data points, D = (d1, d2, ...), and when a normally distributed noise model is used, maximizing L(θ) amounts to minimizing the χ2 statistic (as shown in the entry on parameter estimation):

Here y(x) represents the noise PDF. If the standard deviation of the noise distribution can be treated as constant over all data points, this reduces even further, to the method of least squares, in which the sum of the squared residuals, S, is minimized:

Analytical solutions to the least-squares method exist in some cases. For example, a polynomial model of arbitrary degree can be easily solved, as shown here. For optimization of either test statistic, S or χ2, in more complex situations, numerical solvers such as the Gauss-Newton method or the related Levenberg-Marquardt algorithm can be used.

Related Entries:

Related Blog Posts:

External Links:

Mean

Most generally, the arithmetic mean of a list of numbers is the average: the sum of all the numbers divided by the count of the numbers. It can thus be seen to characterize a global property of the list.

Applied to a probability distribution, the list of numbers in question corresponds to the complete set of hypotheses in the relevant hypothesis space. However, we must consider that each element of the hypothesis space appears in the list more than once, the number of appearances of each being proportional to the probability assigned to it. Thus the mean of a probability distribution is sometimes referred to as a weighted average.

The mean of a probability distribution, often denoted by the greek letter μ (mu), is also known as the expectation, denoted by angle brackets:

μx = 〈X〉

From the weighted-average argument, we can see that for a discrete distribution, the mean is calculated using the formula:

Here, the Σ (upper-case sigma) denotes summation over the entire hypothesis space. This is actually a special case, as a discrete probability distribution can be defined on a continuous space, with the probability distribution function consisting of weighted delta functions. Thus, the more general formula for the mean (over a continuous space) is given by converting the discrete summation to an integral:

The mean of a distribution provides one measure of its location. Another measure of location is the median. The distinction between mean and median is laid out below. Another measure of location is the point of maximum probability, termed the peak, or mode. The peak and the mean often do not coincide. Many distributions do not even not possess a unique peak. The choice between different measures of location depends on how one measures cost: if it is most profitable to minimize the expected square of the difference between a parameter's estimate and its true value, then the mean is the optimal choice.

A probability distribution is often described metaphorically as consisting of mass. Within this metaphor, the mean is seen to correspond to the centre of mass: if the shape of the distribution were to be realized in some material of uniform density, such as a block of wood, then the point at which the object could be made to balance is the mean. At each point in the hypothesis space, from the law of the lever, the important number is the product of the distance of that point from the balance point and the amount of probability (mass) assigned to that point. The mean is thus the location at which the sum of all these products is zero (distances to the right are positive and to the left are negative), hence the wooden model can be made to balance at that point.

While the mean is considered as corresponding to the centre of mass, the median is the midpoint of a distribution: it is the point at which the total amount of probability to the left equals the amount to the right. For the mean, recall that the corresponding sum is weighted by distance, indicating how the mean and median are distinct.

As indicated, the terms mean and average are often used interchangeably, but there is a slight danger here. The mean of a probability distribution is a property of our available information on some set of propositions. The term average, however, suggests the result of a some real experiment, though this latter strictly corresponds to a frequency distribution, which is typically not the same as a probability distribution. See entry on the frequency interpretation of probability for more detail.

The mean of a distribution over x is also the x coordinate of the centroid of its graph, so the term centroid is sometimes used as a synonym for the mean.

As indicated, the terms mean and average are often used interchangeably, but there is a slight danger here. The mean of a probability distribution is a property of our available information on some set of propositions. The term average, however, suggests the result of a some real experiment, though this latter strictly corresponds to a frequency distribution, which is typically not the same as a probability distribution. See entry on the frequency interpretation of probability for more detail.

The mean of a distribution over x is also the x coordinate of the centroid of its graph, so the term centroid is sometimes used as a synonym for the mean.

Related Entries:

Median

The median of a probability distribution is a measure of the probability distribution's location. Other such measures include the mean and mode - generally these all refer to different locations in the hypothesis space, but under special circumstances they can coincide.

The median, m, is the midpoint of a distribution, the point at which the total amount of probability to the left equals the amount of probability to the right. It is therefore specified by setting the integrals up to (down to) m, from the left (right) equal:

The assignment of one half follows from the definition above, and the normalization condition for probability distributions.

The median is distinct from the mean, in the same way that the midpoint is distinct from the centre of mass. The midpoint only cares about the amount of mass on either side, and is not influenced by the relative locations of the constituent masses. The centre of mass, however, is determined not from summing constituent masses, but rather by summing the products of those masses with their distances from some point in space. (This follows from the law of the lever.)

The assignment of one half follows from the definition above, and the normalization condition for probability distributions.

The median is distinct from the mean, in the same way that the midpoint is distinct from the centre of mass. The midpoint only cares about the amount of mass on either side, and is not influenced by the relative locations of the constituent masses. The centre of mass, however, is determined not from summing constituent masses, but rather by summing the products of those masses with their distances from some point in space. (This follows from the law of the lever.)

Related Entries:

Meta-analysis

Meta-analysis is a form of systematic review, aimed at pooling data from a number of different studies, often performed by different researchers, in order to assess the totality of evidence available on some particular research question.

Studies for inclusion in a meta-analysis are usually drawn from the peer-reviewed literature. A good-quality meta-analysis will aim to include all such studies, and so will involve collecting all search results, for some appropriate set of keywords, using one or more major research databases.